spectrochempy.NDDataset

- class NDDataset(data=None, coordset=None, coordunits=None, coordtitles=None, **kwargs)

The main N-dimensional dataset class used by SpectroChemPy.

The NDDataset is the main object used by SpectroChemPy. Like numpy ndarrays, NDDataset objects can be sliced, sorted and subjected to mathematical operations. In addition, an NDDataset may have units, can be masked, and each dimension can have coordinates, also with units. This makes NDDataset aware of unit compatibility, e.g., for binary operations such as addition or subtraction, or during the application of mathematical operations. In addition to, or in replacement of, numerical data for coordinates, an NDDataset can also have labeled coordinates, where labels can be different kinds of objects (str, datetime, ndarray, other NDDataset objects, etc.).

- Parameters

data (array-like) – Data array contained in the object. The data can be a list, a tuple, a ndarray, a subclass of ndarray, another NDDataset or a Coord object. Any size or shape of data is accepted. If not given, an empty NDDataset will be initialized. At initialization, the provided data will eventually be cast to a ndarray. If the provided object already contains a mask or units, these elements will be used, if possible, to set those of the created object. If possible, the provided data will not be copied but will be passed by reference, so make a copy of the data before passing them if that is the desired behavior, or set the copy argument to True.

coordset (CoordSet instance, optional) – It contains the coordinates for the different dimensions of the data. If a CoordSet is provided, it must specify the coord and labels for all dimensions of the data. Multiple coords can be specified in a CoordSet instance for each dimension.

coordunits (list, optional, default: None) – A list of units corresponding to the dimensions in the order of the coordset.

coordtitles (list, optional, default: None) – A list of titles corresponding to the dimensions in the order of the coordset.

**kwargs – Optional keyword parameters (see Other Parameters).

- Other Parameters

dtype (str or dtype, optional, default: np.float64) – If specified, the data will be cast to this dtype, else the data will be cast to float64 or complex128.

dims (list of str, optional) – If specified, the list must have a length equal to the number of data dimensions (ndim) and the elements must be taken among x, y, z, u, v, w or t. If not specified, the dimension names are automatically attributed in this order.

name (str, optional) – A user-friendly name for this object. If not given, the automatic id given at the object creation will be used as a name.

labels (array-like of objects, optional) – Labels for the data. Labels can be used only for 1D datasets. The labels array may have an additional dimension, meaning several series of labels for the same data. The given array can be a list, a tuple, a ndarray, a ndarray-like, a NDArray or any subclass of NDArray.

mask (array-like of bool or NOMASK, optional) – Mask for the data. The mask array must have the same shape as the data. The given array can be a list, a tuple, or a ndarray. Each value in the array must be False where the data are valid and True when they are not (like in numpy masked arrays). If data is already a MaskedArray, or any array object (such as a NDArray or subclass of it), providing a mask here will cause the mask from the masked array to be ignored.

units (Unit instance or str, optional) – Units of the data. If data is a Quantity then units is set to the unit of the data; if a unit is also explicitly provided an error is raised. Handling of units uses the pint package.

timezone (datetime.tzinfo, optional) – The timezone where the data were created. If not specified, the local timezone is assumed.

title (str, optional) – The title of the data dimension. The title attribute should not be confused with the name. The title attribute is used, for instance, for labelling plots of the data. It is optional but recommended to give a title to each ndarray data.

meta (dict-like object, optional) – Additional metadata for this object. Must be dict-like but no further restriction is placed on meta.

author (str, optional) – Name(s) of the author(s) of this dataset. By default, name of the computer node where this dataset is created.

description (str, optional) – An optional description of the nd-dataset. A shorter alias is desc.

origin (str, optional) – Origin of the data: name of organization, address, telephone number, name of individual contributor, etc., as appropriate.

roi (list) – Region of interest (ROI) limits.

history (str, optional) – A string to add to the object history.

copy (bool, optional) – Perform a copy of the passed object. Default is False.
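The mask convention and the pass-by-reference behaviour described for data, mask and copy can be illustrated with plain NumPy. This is only a sketch of the underlying ideas: it does not call SpectroChemPy, the sample values are made up, and whether NDDataset actually shares memory in a given case depends on the library ("if possible" in the text above).

```python
import numpy as np

# Raw measurements; pretend the third value is a known bad reading.
data = np.array([1.0, 2.0, -999.0, 4.0])

# Mask convention: False where the data are valid, True where they are not.
mask = np.array([False, False, True, False])
masked = np.ma.masked_array(data, mask=mask)

# Masked entries are ignored by reductions.
valid_mean = masked.mean()  # mean of 1.0, 2.0 and 4.0

# Passing by reference: np.asarray does not copy an existing ndarray,
# so a change made through the view is visible in the original.
view = np.asarray(data)
view[0] = 10.0
shares_memory = np.shares_memory(view, data)

# An explicit copy is disconnected from the original.
independent = np.array(data, copy=True)
independent[1] = 20.0
```

After this runs, data[0] is 10.0 (changed through the view) while data[1] is still 2.0 (the copy was modified, not the original), which is the reason the text recommends copying first or setting copy to True when the input must stay untouched.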

Notes

The underlying array in a NDDataset object can be accessed through the data attribute, which will return a conventional ndarray.

Attributes Summary

The array with imaginary-imaginary component of hypercomplex 2D data.

The array with imaginary-real component of hypercomplex 2D data.

The array with real-imaginary component of hypercomplex 2D data.

The array with real component in both dimensions of hypercomplex 2D data.

Transposed NDDataset.

Acquisition date.

Creator of the dataset (str).

The main matplotlib axes associated to this dataset.

The matplotlib axes associated to the transposed dataset.

Matplotlib colorbar axes associated to this dataset.

Matplotlib colorbar axes associated to the transposed dataset.

Matplotlib projection x axes associated to this dataset.

Matplotlib projection y axes associated to this dataset.

Provides a comment (alias to the description attribute).

List of the Coord names.

CoordSet instance.

List of the Coord titles.

List of the Coord units.

Creation date object (Datetime).

The data array.

Provides a description of the underlying data (str).

True if the data array is dimensionless - Readonly property (bool).

Names of the dimensions (list).

Current directory for this dataset.

Matplotlib plot divider.

Return the data type.

Matplotlib figure associated to this dataset.

Matplotlib figure associated to this dataset.

Current filename for this dataset.

Type of current file.

True if at least one of the data array dimensions is complex.

True if the data array is not empty.

True if the name has been defined (bool).

True if the data array has units - Readonly property (bool).

Describes the history of actions made on this array (List of strings).

Object identifier - Readonly property (str).

The array with imaginary component of the data.

True if the data array has only one dimension (bool).

True if the 'data' are complex (Readonly property).

True if the data array is empty or size=0, and if no labels are present.

True if the data are real values - Readonly property (bool).

True if the data are integer values - Readonly property (bool).

True if the data array is hypercomplex with interleaved data.

True if the data array has labels - Readonly property (bool).

True if the data array has masked values.

True if the data array is hypercomplex.

Range of the data.

Return the local timezone.

Data array (ndarray).

Data array (ndarray).

Mask for the data (ndarray of bool).

The actual masked data array - Readonly property (numpy.ma.ndarray).

Additional metadata (Meta).

ndarray - models data.

Date of modification (readonly property).

A user-friendly name (str).

A dictionary containing all the axes of the current figures.

The number of dimensions of the data array (Readonly property).

Origin of the data.

Project instance.

Meta instance object - Additional metadata.

The array with real component of the data.

Region of interest (ROI) limits (list).

A tuple with the size of each dimension - Readonly property.

Size of the underlying data array - Readonly property (int).

Filename suffix.

Return the timezone information.

A user-friendly title (str).

The actual array with mask and unit (Quantity).

bool - True if the data does not have units (Readonly property).

Unit - The units of the data.

Alias of values.

Quantity - The actual values (data, units) contained in this object (Readonly property).

Methods Summary

abs(dataset[, dtype]) – Calculate the absolute value of the given NDDataset element-wise.

absolute(dataset[, dtype]) – Calculate the absolute value of the given NDDataset element-wise.

add_coordset(*coords[, dims]) – Add one or a set of coordinates from a dataset.

align(dataset, *others, **kwargs) – Align individual NDDataset along given dimensions using various methods.

all(dataset[, dim, keepdims]) – Test whether all array elements along a given axis evaluate to True.

amax(dataset[, dim, keepdims]) – Return the maximum of the dataset or maxima along given dimensions.

amin(dataset[, dim, keepdims]) – Return the minimum of the dataset or minima along given dimensions.

any(dataset[, dim, keepdims]) – Test whether any array element along a given axis evaluates to True.

arange([start, stop, step, dtype]) – Return evenly spaced values within a given interval.

argmax(dataset[, dim]) – Indexes of maximum of data along axis.

argmin(dataset[, dim]) – Indexes of minimum of data along axis.

around(dataset[, decimals]) – Evenly round to the given number of decimals.

Make data and mask (ndim >= 1) laid out in Fortran order in memory.

asls(dataset[, lamb, asymmetry, tol, max_iter]) – Asymmetric Least Squares Smoothing baseline correction.

astype([dtype]) – Cast the data to a specified type.

atleast_2d([inplace]) – Expand the shape of an array to make it at least 2D.

autosub(dataset, ref, *ranges[, dim, ...]) – Automatic subtraction of a reference to the dataset.

average(dataset[, dim, weights, keepdims]) – Compute the weighted average along the specified axis.

bartlett(dataset, **kwargs) – Calculate Bartlett apodization (triangular window with end points at zero).

basc(dataset, *ranges, **kwargs) – Compute a baseline corrected dataset using the Baseline class processor.

blackmanharris(dataset, ...) – Calculate a minimum 4-term Blackman-Harris apodization.

clip(dataset[, a_min, a_max]) – Clip (limit) the values in a dataset.

Close a Matplotlib figure associated to this dataset.

component([select]) – Take selected components of a hypercomplex array (RRR, RIR, ...).

concatenate(*datasets, **kwargs) – Concatenation of NDDataset objects along a given axis.

conj(dataset[, dim]) – Conjugate of the NDDataset in the specified dimension.

conjugate(dataset[, dim]) – Conjugate of the NDDataset in the specified dimension.

coord([dim]) – Return the coordinates along the given dimension.

coordmax(dataset[, dim]) – Find coordinates of the maximum of data along axis.

coordmin(dataset[, dim]) – Find coordinates of the minimum of data along axis.

copy([deep, keepname]) – Make a disconnected copy of the current object.

cs(dataset[, pts, neg]) – Circular shift.

cumsum(dataset[, dim, dtype]) – Return the cumulative sum of the elements along a given axis.

dc(dataset, **kwargs) – Time domain baseline correction.

Delete all coordinate settings.

denoise(dataset[, ratio]) – Denoise the data using a PCA method.

despike(dataset[, size, delta]) – Remove convex spike from the data using the Katsumoto-Ozaki procedure.

detrend(dataset[, order, breakpoints]) – Remove polynomial trend along a dimension from dataset.

diag(dataset[, offset]) – Extract a diagonal or construct a diagonal array.

diagonal(dataset[, offset, dim, dtype]) – Return the diagonal of a 2D array.

dot(a, b[, strict, out]) – Return the dot product of two NDDatasets.

download_nist_ir(CAS[, index]) – Download IR spectra from the NIST WebBook.

dump(filename, **kwargs) – Save the current object into compressed native spectrochempy format.

em([lb, shifted]) – Calculate exponential apodization.

empty(shape[, dtype]) – Return a new NDDataset of given shape and type, without initializing entries.

empty_like(dataset[, dtype]) – Return a new uninitialized NDDataset.

eye(N[, M, k, dtype]) – Return a 2-D array with ones on the diagonal and zeros elsewhere.

fft(dataset[, size, sizeff, inv, ppm]) – Apply a complex fast Fourier transform.

find_peaks(dataset[, height, window_length, ...]) – Wrapper and extension of scipy.signal.find_peaks.

fromfunction(cls, function[, shape, dtype, ...]) – Construct a nddataset by executing a function over each coordinate.

fromiter(iterable[, dtype, count])Create a new 1-dimensional array from an iterable object.

fsh(dataset, pts, **kwargs)Frequency shift by Fourier transform.

fsh2(dataset, pts, **kwargs)Frequency Shift by Fourier transform.

full(shape[, fill_value, dtype])Return a new

NDDatasetof given shape and type, filled withfill_value.full_like(dataset[, fill_value, dtype])Return a

NDDatasetof fill_value.general_hamming([alpha])Calculate generalized Hamming apodization.

geomspace(start, stop[, num, endpoint, dtype])Return numbers spaced evenly on a log scale (a geometric progression).

get_axis(*args, **kwargs)Helper function to determine an axis index whatever the syntax used (axis index or dimension names).

get_baseline(dataset, *ranges, **kwargs)Compute a baseline using the Baseline class processor.

get_labels([level])Get the labels at a given level.

gm([gb, lb, shifted, gb, lb, shifted])Calculate lorentz-to-gauss apodization.

hamming(dataset, **kwargs)Calculate generalized Hamming (== Happ-Genzel) apodization.

hann(dataset, **kwargs)Return a Hann window.

ht(dataset[, N])Hilbert transform.

identity(n[, dtype])Return the identity

NDDatasetof a given shape.ifft(dataset[, size])Apply a inverse fast fourier transform.

is_units_compatible(other)Check the compatibility of units with another object.

ito(other[, force])Inplace scaling to different units.

Inplace rescaling to base units.

Quantity scaled in place to reduced units, inplace.

linspace(cls, start, stop[, num, endpoint, ...])Return evenly spaced numbers over a specified interval.

load(filename, **kwargs)Open data from a '*.scp' (NDDataset) or '*.pscp' (Project) file.

Upload the classical "iris" dataset.

logspace(cls, start, stop[, num, endpoint, ...])Return numbers spaced evenly on a log scale.

ls(dataset[, pts])Left shift and zero fill.

max(dataset[, dim, keepdims])Return the maximum of the dataset or maxima along given dimensions.

mc(dataset)Modulus calculation.

mean(dataset[, dim, dtype, keepdims])Compute the arithmetic mean along the specified axis.

min(dataset[, dim, keepdims])Return the maximum of the dataset or maxima along given dimensions.

ones(shape[, dtype])Return a new

NDDatasetof given shape and type, filled with ones.ones_like(dataset[, dtype])Return

NDDatasetof ones.pipe(func, *args, **kwargs)Apply func(self, *args, **kwargs).

pk(dataset[, phc0, phc1, exptc, pivot])Linear phase correction.

pk_exp(dataset[, phc0, pivot, exptc])Exponential Phase Correction.

plot([method])Generic plot function.

plot_1D(_PLOT1D_DOC)def plot_1D(dataset[, ...])Plot of one-dimensional data.

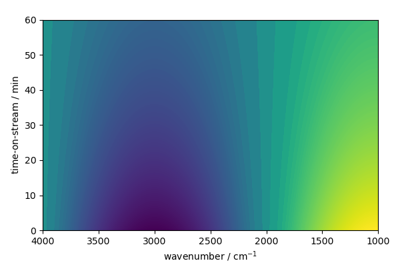

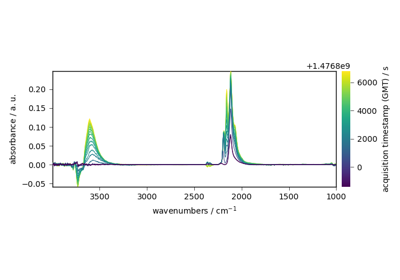

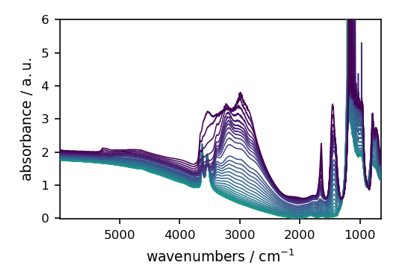

plot_2D(_PLOT2D_DOC)def plot_2D(dataset[, ...])Plot of 2D array.

plot_3D(_PLOT3D_DOC)def plot_3D(dataset[, ...])Plot of 2D array as 3D plot

plot_bar(dataset, *args, **kwargs)Plot a 1D dataset with bars.

plot_image(dataset, *args, **kwargs)Plot a 2D dataset as an image plot.

plot_map(dataset, *args, **kwargs)Plot a 2D dataset as a contoured map.

plot_multiple(datasets[, method, pen, ...])Plot a series of 1D datasets as a scatter plot with optional lines between markers.

plot_pen(dataset, *args, **kwargs)Plot a 1D dataset with solid pen by default.

plot_scatter(dataset, *args, **kwargs)Plot a 1D dataset as a scatter plot (points can be added on lines).

plot_scatter_pen(dataset, *args, **kwargs)Plot a 1D dataset with solid pen by default.

plot_stack(dataset, *args, **kwargs)Plot a 2D dataset as a stack plot.

plot_surface(dataset, *args, **kwargs)Plot a 2D dataset as a a 3D-surface.

plot_waterfall(dataset, *args, **kwargs)Plot a 2D dataset as a a 3D-waterfall plot.

ps(dataset)Power spectrum.

ptp(dataset[, dim, keepdims])Range of values (maximum - minimum) along a dimension.

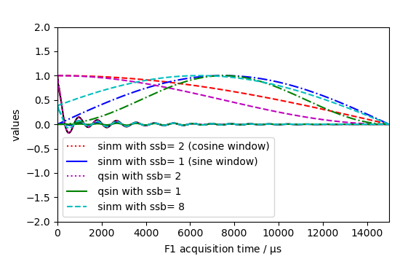

qsin(dataset[, ssb])Equivalent to

sp, with pow = 2 (squared sine bell apodization window).random([size, dtype])Return random floats in the half-open interval [0.0, 1.0).

read(*paths, **kwargs)Generic read method.

read_carroucell([directory])Open

.spafiles in a directory after a carroucell experiment.read_csv(*paths, **kwargs)Open a

.csvfile or a list of.csvfiles.read_ddr(*paths, **kwargs)Open a Surface Optics Corps.

read_dir([directory])Read an entire directory.

read_hdr(*paths, **kwargs)Open a Surface Optics Corps.

read_jcamp(*paths, **kwargs)Open Infrared

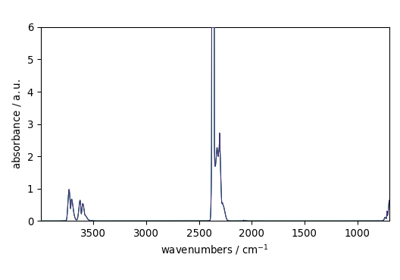

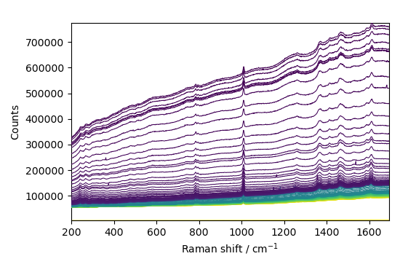

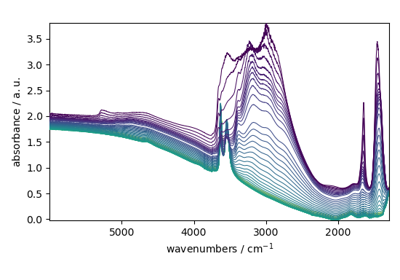

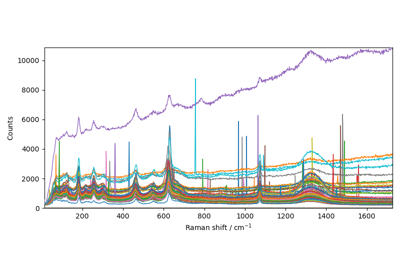

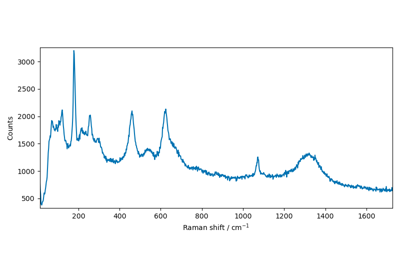

JCAMP-DXfiles with extension.jdxor.dx.read_labspec(*paths, **kwargs)Read a single Raman spectrum or a series of Raman spectra.

read_mat(*paths, **kwargs)Read a matlab file with extension

.matand return its content as a list.read_matlab(*paths, **kwargs)Read a matlab file with extension

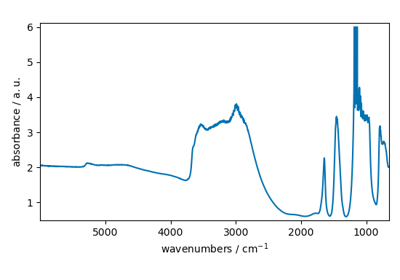

.matand return its content as a list.read_omnic(*paths, **kwargs)Open a Thermo Nicolet OMNIC file.

read_opus(*paths, **kwargs)Open Bruker OPUS file(s).

read_quadera(*paths, **kwargs)Read a Pfeiffer Vacuum's QUADERA mass spectrometer software file with extension

.asc.read_sdr(*paths, **kwargs)Open a Surface Optics Corps.

read_soc(*paths, **kwargs)Read a Surface Optics Corps.

read_spa(*paths, **kwargs)Open a Thermo Nicolet file or a list of files with extension

.spa.read_spc(*paths, **kwargs)Read GRAMS/Thermo Scientific Galactic files or a list of files with extension

.spc.read_spg(*paths, **kwargs)Open a Thermo Nicolet file or a list of files with extension

.spg.read_srs(*paths, **kwargs)Open a Thermo Nicolet file or a list of files with extension

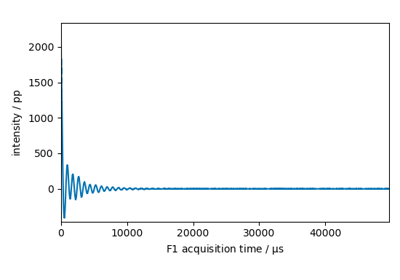

.srs.read_topspin(*paths, **kwargs)Open Bruker TOPSPIN (NMR) dataset.

read_wdf(*paths, **kwargs)Read a single Raman spectrum or a series of Raman spectra.

read_wire(*paths, **kwargs)Read a single Raman spectrum or a series of Raman spectra.

read_zip(*paths, **kwargs)Open a zipped list of data files.

Remove all masks previously set on this array.

roll(dataset[, pts, neg]) – Roll dimensions.

round(dataset[, decimals]) – Evenly round to the given number of decimals.

round_(dataset[, decimals]) – Evenly round to the given number of decimals.

rs(dataset[, pts]) – Right shift and zero fill.

rubberband(dataset) – Rubberband baseline correction.

save(**kwargs) – Save the current object in SpectroChemPy format.

save_as([filename]) – Save the current NDDataset in SpectroChemPy format (*.scp).

savgol(dataset[, size, order]) – Savitzky-Golay filter.

savgol_filter(*args, **kwargs) – Savitzky-Golay filter.

set_complex([inplace]) – Set the object data as complex.

set_coordset(*args, **kwargs) – Set one or more coordinates at once.

set_coordtitles(*args, **kwargs) – Set titles of one or more coordinates.

set_coordunits(*args, **kwargs) – Set units of one or more coordinates.

set_hypercomplex([inplace]) – Alias of set_quaternion.

set_quaternion([inplace]) – Alias of set_quaternion.

simps([replace]) – An alias of simpson kept for backwards compatibility.

simpson(dataset, *args, **kwargs) – Integrate using the composite Simpson's rule.

sine(dataset, *args, **kwargs) – Strictly equivalent to sp.

sinm(dataset[, ssb]) – Equivalent to sp, with pow = 1 (sine bell apodization window).

smooth(dataset[, size, window]) – Smooth the data using a window with requested size.

snip(dataset[, snip_width]) – Simple Non-Iterative Peak (SNIP) detection algorithm.

sort(**kwargs) – Return the dataset sorted along a given dimension.

sp([ssb, pow]) – Calculate apodization with a Sine window multiplication.

squeeze(*dims[, inplace]) – Remove single-dimensional entries from the shape of a NDDataset.

stack(*datasets) – Stack of NDDataset objects along a new dimension.

std(dataset[, dim, dtype, ddof, keepdims]) – Compute the standard deviation along the specified axis.

sum(dataset[, dim, dtype, keepdims]) – Sum of array elements over a given axis.

swapaxes(dim1, dim2[, inplace]) – Alias of swapdims.

swapdims(dim1, dim2[, inplace]) – Interchange two dimensions of a NDDataset.

take(indices, **kwargs) – Take elements from an array.

to(other[, inplace, force]) – Return the object with data rescaled to different units.

to_array() – Return a numpy masked array.

to_base_units([inplace]) – Return an array rescaled to base units.

to_reduced_units([inplace]) – Return an array scaled in place to reduced units.

Convert a NDDataset instance to a DataArray object.

transpose(*dims[, inplace]) – Permute the dimensions of a NDDataset.

trapezoid(dataset, **kwargs) – Integrate using the composite trapezoidal rule.

trapz([replace]) – An alias of trapezoid kept for backwards compatibility.

triang(dataset, **kwargs) – Calculate triangular apodization with non-null extremities and maximum value normalized to 1.

var(dataset[, dim, dtype, ddof, keepdims]) – Compute the variance along the specified axis.

whittaker(dataset[, lamb, order]) – Smooth the data using the Whittaker smoothing algorithm.

write(dataset[, filename]) – Write the current dataset.

write_csv(*args, **kwargs) – Write a dataset in CSV format.

write_excel(*args, **kwargs) – This method is an alias of write_excel.

write_jcamp(*args, **kwargs) – Write a dataset in JCAMP-DX format.

write_mat(*args, **kwargs) – This method is an alias of write_matlab.

write_matlab(*args, **kwargs) – This method is an alias of write_matlab.

write_xls(*args, **kwargs) – This method is an alias of write_excel.

zeros(shape[, dtype]) – Return a new NDDataset of given shape and type, filled with zeros.

zeros_like(dataset[, dtype]) – Return a NDDataset of zeros.

zf(dataset[, size, mid]) – Zero fill to given size.

zf_auto(dataset[, mid]) – Zero fill to next largest power of two.

zf_double(dataset, n[, mid]) – Zero fill by doubling original data size once or multiple times.

zf_size(dataset[, size, mid]) – Zero fill to given size.

Attributes Documentation

- II

The array with imaginary-imaginary component of hypercomplex 2D data.

(Readonly property).

- RR

The array with real component in both dimensions of hypercomplex 2D data.

This readonly property is equivalent to the real property.

- acquisition_date

Acquisition date.

- author

Creator of the dataset (str).

- ax

The main matplotlib axes associated to this dataset.

- axT

The matplotlib axes associated to the transposed dataset.

- axec

Matplotlib colorbar axes associated to this dataset.

- axecT

Matplotlib colorbar axes associated to the transposed dataset.

- axex

Matplotlib projection x axes associated to this dataset.

- axey

Matplotlib projection y axes associated to this dataset.

- comment

Provides a comment (alias to the description attribute).

- coordset

CoordSet instance.

Contains the coordinates of the various dimensions of the dataset. It’s a readonly property. Use set_coordset to change one or more coordinates at once.

- coordtitles

List of the Coord titles.

Read only property. Use set_coordtitles to set titles.

- coordunits

List of the Coord units.

Read only property. Use set_coordunits to set units.

- created

Creation date object (Datetime).

- description

Provides a description of the underlying data (str).

- dimensionless

True if the data array is dimensionless - Readonly property (bool).

Notes

Dimensionless is different from unitless, which means no unit.

- dims

Names of the dimensions (list).

The names of the dimensions are ‘x’, ‘y’, ‘z’, etc., depending on the number of dimensions.

- directory

Current directory for this dataset.

ReadOnly property - automatically set when the filename is updated if it contains a parent on its path.

- divider

Matplotlib plot divider.

- dtype

Return the data type.

- fig

Matplotlib figure associated to this dataset.

- fignum

Matplotlib figure associated to this dataset.

- filename

Current filename for this dataset.

- filetype

Type of current file.

- has_defined_name

True if the name has been defined (bool).

- has_units

True if the data array has units - Readonly property (bool).

See also

unitless – True if the data has no unit.

dimensionless – True if the data have dimensionless units.

- history

Describes the history of actions made on this array (List of strings).

- id

Object identifier - Readonly property (str).

- is_complex

True if the ‘data’ are complex (Readonly property).

- is_empty

True if the data array is empty or size=0, and if no labels are present.

Readonly property (bool).

- labels

An array of labels for data (ndarray of str).

An array of objects of any type (but most generally string), with the last dimension size equal to that of the dimension of data. Note that labelling is possible only for 1D data. One classical application is the labelling of coordinates to display informative strings instead of numerical values.

- limits

Range of the data.

- local_timezone

Return the local timezone.

- m

Data array (ndarray).

If there is no data but labels, then the labels are returned instead of data.

- magnitude

Data array (ndarray).

If there is no data but labels, then the labels are returned instead of data.

- meta

Additional metadata (Meta).

- modified

Date of modification (readonly property).

- name

A user-friendly name (str).

When no name is provided, the id of the object is returned instead.

- ndaxes

A dictionary containing all the axes of the current figures.

- origin

Origin of the data.

e.g. spectrometer or software

- preferences

Meta instance object - Additional metadata.

- roi

Region of interest (ROI) limits (list).

- shape

A tuple with the size of each dimension - Readonly property.

The number of data elements on each dimension (possibly complex). For an array with only labels, there is no data, so it is 1D and the size is the size of the array of labels.

- size

Size of the underlying data array - Readonly property (int).

The total number of data elements (possibly complex or hypercomplex) in the array.

- suffix

Filename suffix.

Read Only property - automatically set when the filename is updated if it has a suffix, else give the default suffix for the given type of object.

- timezone

Return the timezone information.

A timezone’s offset refers to how many hours the timezone is from Coordinated Universal Time (UTC).

In spectrochempy, all datetimes are stored in UTC, so that conversion must be done during the display of these datetimes using tzinfo.
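The store-in-UTC, convert-on-display pattern described for timezone can be sketched with the standard library alone. The date and the +2 hour display offset below are hypothetical values chosen for illustration; the snippet does not use SpectroChemPy.

```python
from datetime import datetime, timedelta, timezone

# A timestamp stored in UTC, as the text above describes.
stored = datetime(2023, 5, 17, 12, 0, tzinfo=timezone.utc)

# Hypothetical display timezone: a fixed offset of +2 hours from UTC.
display_tz = timezone(timedelta(hours=2))

# Conversion happens only at display time; the instant itself is unchanged.
shown = stored.astimezone(display_tz)

# The offset of the displayed value from UTC, in hours.
offset_hours = shown.utcoffset().total_seconds() / 3600

print(shown.isoformat())  # 2023-05-17T14:00:00+02:00
```

The two datetime objects compare equal because they denote the same instant; only the rendering differs.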

- title

A user-friendly title (str).

When the title is provided, it can be used for labeling the object, e.g., axis title in a matplotlib plot.

Methods Documentation

- abs(dataset, dtype=None)

Calculate the absolute value of the given NDDataset element-wise.

abs is a shorthand for this function. For complex input, a + ib, the absolute value is \(\sqrt{ a^2 + b^2}\).

- Parameters

dataset (NDDataset or array-like) – Input array or object that can be converted to an array.

dtype (dtype) – The type of the output array. If dtype is not given, infer the data type from the other input arguments.

- Returns

NDDataset – The absolute value of each element in dataset.
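As a quick check of the complex-modulus formula above, here is a sketch using only Python's built-in complex type (not the NDDataset method itself); the value 3 + 4i is an arbitrary example.

```python
import math

z = complex(3.0, 4.0)  # a = 3, b = 4

# Built-in abs() on a complex number returns its modulus.
modulus = abs(z)

# The same value written out as sqrt(a^2 + b^2).
by_formula = math.sqrt(z.real ** 2 + z.imag ** 2)

print(modulus, by_formula)  # 5.0 5.0
```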

- absolute(dataset, dtype=None)

Calculate the absolute value of the given NDDataset element-wise.

abs is a shorthand for this function. For complex input, a + ib, the absolute value is \(\sqrt{ a^2 + b^2}\).

- Parameters

dataset (NDDataset or array-like) – Input array or object that can be converted to an array.

dtype (dtype) – The type of the output array. If dtype is not given, infer the data type from the other input arguments.

- Returns

NDDataset – The absolute value of each element in dataset.

- add_coordset(*coords, dims=None, **kwargs)

Add one or a set of coordinates from a dataset.

- Parameters

*coords (iterable) – Coordinates object(s).

dims (list) – Name of the coordinates.

**kwargs – Optional keyword parameters passed to the coordset.

- align(dataset, *others, **kwargs)

Align individual NDDataset along given dimensions using various methods.

- Parameters

dataset (NDDataset) – Dataset on which we want to align other objects.

*others (NDDataset) – Objects to align.

dim (str, optional, default=’x’) – Along which axis to perform the alignment.

dims (list of str, optional, default=None) – Align along all dims defined in dims (if dim is also defined, then dims have higher priority).

method (enum [‘outer’, ‘inner’, ‘first’, ‘last’, ‘interpolate’], optional, default=’outer’) – Which method to use for the alignment.

If align is defined:

‘outer’ means that a union of the different coordinates is achieved (missing values are masked).

‘inner’ means that the intersection of the coordinates is used.

‘first’ means that the first dataset is used as reference.

‘last’ means that the last dataset is used as reference.

‘interpolate’ means that interpolation is performed relative to the first dataset.

interpolate_method (enum [‘linear’, ’pchip’], optional, default=’linear’) – Method of interpolation to perform for the alignment.

interpolate_sampling (‘auto’, int or float, optional, default=’auto’) – Values:

‘auto’: sampling is determined automatically from the existing data.

int: if an integer value is specified, then the sampling interval for the interpolated data will be split in this number of points.

float: if a float value is provided, it determines the interval between the interpolated data.

coord (Coord, optional, default=None) – Coordinates to use for alignment. Ignore those corresponding to the dimensions to align.

copy (bool, optional, default=True) – If False then the returned objects will share memory with the original objects, whenever it is possible: in principle only if reindexing is not necessary.

- Returns

tuple of NDDataset – Same objects as datasets with dimensions aligned.

- Raises

ValueError – Issued when the dimensions given in dim or dims argument are not compatible (units, titles, etc.).
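The difference between the ‘outer’ and ‘inner’ methods can be pictured with a toy example in plain Python. This is only a conceptual sketch, not the library's implementation: the coordinate values are invented, and None stands in for what align would return as a masked value.

```python
# Hypothetical coordinate values of two 1D datasets along the same dimension.
coords_a = [1.0, 2.0, 3.0]
coords_b = [2.0, 3.0, 4.0]
values_a = {1.0: 10.0, 2.0: 20.0, 3.0: 30.0}

# 'outer': union of the coordinates.
outer = sorted(set(coords_a) | set(coords_b))

# 'inner': intersection of the coordinates.
inner = sorted(set(coords_a) & set(coords_b))

# Re-express dataset A on the outer grid; None stands in for a masked value.
a_on_outer = [values_a.get(c) for c in outer]

print(outer)       # [1.0, 2.0, 3.0, 4.0]
print(inner)       # [2.0, 3.0]
print(a_on_outer)  # [10.0, 20.0, 30.0, None]
```

With ‘outer’, dataset A gains a position at coordinate 4.0 that it has no value for, which is why missing values are masked; with ‘inner’, both datasets are restricted to the coordinates they share.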

- all(dataset, dim=None, keepdims=False)

Test whether all array elements along a given axis evaluate to True.

- Parameters

dataset (array_like) – Input array or object that can be converted to an array.

dim (None or int or str, optional) – Axis or axes along which a logical AND reduction is performed. The default (axis=None) is to perform a logical AND over all the dimensions of the input array. axis may be negative, in which case it counts from the last to the first axis.

keepdims (bool, optional) – If this is set to True, the axes which are reduced are left in the result as dimensions with size one. With this option, the result will broadcast correctly against the input array. If the default value is passed, then keepdims will not be passed through to the all method of sub-classes of ndarray, however any non-default value will be. If the sub-class’ method does not implement keepdims any exceptions will be raised.

- Returns

all – A new boolean or array is returned unless out is specified, in which case a reference to out is returned.

See also

any – Test whether any element along a given axis evaluates to True.

Notes

Not a Number (NaN), positive infinity and negative infinity evaluate to True because these are not equal to zero.
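The rule in the note is ordinary truthiness of floating-point values: anything not equal to zero counts as True. A small check with plain Python floats (used here as a stand-in; it does not call the NDDataset method) shows the behaviour:

```python
import math

values = [math.nan, math.inf, -math.inf]

# Each of these is "not equal to zero", so each one is truthy.
truthiness = [bool(v) for v in values]

# all() is True for the special values alone...
all_special = all(values)

# ...and becomes False as soon as a genuine zero is present.
with_zero = all(values + [0.0])

print(truthiness, all_special, with_zero)  # [True, True, True] True False
```

A practical consequence is that all cannot be used to detect NaN entries; an explicit test such as math.isnan is needed for that.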

- amax(dataset, dim=None, keepdims=False, **kwargs)[source]¶

Return the maximum of the dataset or maxima along given dimensions.

- Parameters

dataset (array_like) – Input array or object that can be converted to an array.

dim (None or int or dimension name or tuple of int or dimensions, optional) – Dimension or dimensions along which to operate. By default, flattened input is used. If this is a tuple, the maximum is selected over multiple dimensions, instead of a single dimension or all the dimensions as before.

keepdims (bool, optional) – If this is set to True, the axes which are reduced are left in the result as dimensions with size one. With this option, the result will broadcast correctly against the input array.

- Returns

amax – Maximum of the data. If dim is None, the result is a scalar value. If dim is given, the result is an array of dimension ndim - 1.

See also

amin – The minimum value of a dataset along a given dimension, propagating NaNs.

minimum – Element-wise minimum of two datasets, propagating any NaNs.

maximum – Element-wise maximum of two datasets, propagating any NaNs.

fmax – Element-wise maximum of two datasets, ignoring any NaNs.

fmin – Element-wise minimum of two datasets, ignoring any NaNs.

argmax – Return the indices or coordinates of the maximum values.

argmin – Return the indices or coordinates of the minimum values.

Notes

For datasets of complex or hypercomplex type, the default is the value with the maximum real part.
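The following NumPy sketch illustrates two points from this entry under stated assumptions: selecting the element with the largest real part (one reading of the note on complex data, not SpectroChemPy's own code), and using keepdims so that the maxima broadcast against the input.

```python
import numpy as np

z = np.array([1 + 5j, 3 - 2j, 2 + 9j])

# Pick the element whose real part is largest.
largest = z[np.argmax(z.real)]

# keepdims leaves the reduced axis with size one, so the row maxima
# can divide the original array directly.
data = np.array([[1.0, 7.0, 3.0],
                 [4.0, 2.0, 9.0]])
row_max = np.max(data, axis=1, keepdims=True)
normalized = data / row_max
```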

- amin(dataset, dim=None, keepdims=False, **kwargs)[source]¶

Return the minimum of the dataset or minima along given dimensions.

- Parameters

dataset (array_like) – Input array or object that can be converted to an array.

dim (None or int or dimension name or tuple of int or dimensions, optional) – Dimension or dimensions along which to operate. By default, flattened input is used. If this is a tuple, the minimum is selected over multiple dimensions, instead of a single dimension or all the dimensions as before.

keepdims (bool, optional) – If this is set to True, the dimensions which are reduced are left in the result as dimensions with size one. With this option, the result will broadcast correctly against the input array.

- Returns

amin – Minimum of the data. If dim is None, the result is a scalar value. If dim is given, the result is an array of dimension ndim - 1.

See also

amax – The maximum value of a dataset along a given dimension, propagating NaNs.

minimum – Element-wise minimum of two datasets, propagating any NaNs.

maximum – Element-wise maximum of two datasets, propagating any NaNs.

fmax – Element-wise maximum of two datasets, ignoring any NaNs.

fmin – Element-wise minimum of two datasets, ignoring any NaNs.

argmax – Return the indices or coordinates of the maximum values.

argmin – Return the indices or coordinates of the minimum values.

- any(dataset, dim=None, keepdims=False)[source]¶

Test whether any array element along a given axis evaluates to True.

Returns a single boolean unless dim is not None.

- Parameters

dataset (array_like) – Input array or object that can be converted to an array.

dim (None or int or tuple of ints, optional) – Axis or axes along which a logical OR reduction is performed. The default (dim=None) is to perform a logical OR over all the dimensions of the input array. dim may be negative, in which case it counts from the last to the first axis.

keepdims (bool, optional) – If this is set to True, the axes which are reduced are left in the result as dimensions with size one. With this option, the result will broadcast correctly against the input array. If the default value is passed, then keepdims will not be passed through to the any method of sub-classes of ndarray, however any non-default value will be. If the sub-class’ method does not implement keepdims, an exception will be raised.

- Returns

any – A new boolean or ndarray is returned.

See also

all – Test whether all array elements along a given axis evaluate to True.

- arange(start=0, stop=None, step=None, dtype=None, **kwargs)[source]¶

Return evenly spaced values within a given interval.

Values are generated within the half-open interval [start, stop).

- Parameters

start (number, optional) – Start of interval. The interval includes this value. The default start value is 0.

stop (number) – End of interval. The interval does not include this value, except in some cases where step is not an integer and floating point round-off affects the length of out. It might be preferable to use linspace in such cases.

step (number, optional) – Spacing between values. For any output out, this is the distance between two adjacent values, out[i+1] - out[i]. The default step size is 1. If step is specified as a positional argument, start must also be given.

dtype (dtype) – The type of the output array. If dtype is not given, infer the data type from the other input arguments.

**kwargs – Keyword arguments used when creating the returned object, such as units, name, title, …

- Returns

arange – Array of evenly spaced values.

See also

linspace – Evenly spaced numbers with careful handling of endpoints.

Examples

>>> scp.arange(1, 20.0001, 1, units='s', name='mycoord')
NDDataset: [float64] s (size: 20)
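To make the half-open interval and the endpoint handling concrete, here is a small sketch with NumPy's arange and linspace. It assumes the SpectroChemPy functions follow the same numerical conventions as their NumPy counterparts, which the descriptions above suggest.

```python
import numpy as np

# Half-open interval: the stop value 5 is excluded.
half_open = np.arange(1, 5, 1)

# Padding the stop value slightly, as in the example above, includes 20.
padded = np.arange(1, 20.0001, 1)

# linspace states the number of points explicitly and includes both ends.
explicit = np.linspace(1.0, 20.0, 20)
```

Stating the number of points avoids relying on a padded stop value when the step is not an integer.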

- around(dataset, decimals=0)[source]¶

Evenly round to the given number of decimals.

- Parameters

dataset (NDDataset) – Input dataset.

decimals (int, optional) – Number of decimal places to round to (default: 0). If decimals is negative, it specifies the number of positions to the left of the decimal point.

- Returns

rounded_array – NDDataset containing the rounded values. The real and imaginary parts of complex numbers are rounded separately. The result of rounding a float is a float. If the dataset contains masked data, the mask remains unchanged.

See also

numpy.round, around, spectrochempy.round, spectrochempy.around, ceil, fix, floor, rint, trunc
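The rounding rules described above can be checked with NumPy's around, which is assumed here to be the function that this method mirrors (the See also list points to it, but the equivalence is not verified).

```python
import numpy as np

# Halves are rounded to the nearest even value ("evenly round").
halves = np.around(np.array([0.5, 1.5, 2.5, 3.5]))

# A negative decimals value rounds to the left of the decimal point.
hundreds = np.around(np.array([1234.0, 1288.0]), decimals=-2)

# Real and imaginary parts are rounded separately.
rounded_complex = np.around(np.array([1.26 + 2.74j]), decimals=1)
```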

- asls(dataset, lamb=1e5, asymmetry=0.05, tol=1e-3, max_iter=50)[source]¶

Asymmetric Least Squares Smoothing baseline correction.

This method is based on the work of Eilers and Boelens ([Eilers and Boelens, 2005]).

- Parameters

dataset (NDDataset) – The input data.

lamb (float, optional, default: 1e5) – The smoothness parameter for the AsLS method. Larger values make the baseline stiffer. Values should be in the range (0, 1e9).

asymmetry (float, optional, default: 0.05) – The asymmetry parameter for the AsLS method. It is typically between 0.001 and 0.1. 0.001 gives almost the same fit as the unconstrained least squares.

tol (float, optional, default: 1e-3) – The tolerance parameter for the AsLS method. Smaller values make the fitting better but potentially increase the number of iterations and the running time. Values should be in the range (0, 1).

max_iter (int, optional, default: 50) – Maximum number of AsLS iterations.

- Returns

NDDataset – The baseline-corrected dataset.

See also

Baseline – Manual baseline correction processor.

get_baseline – Compute a baseline using the Baseline class.

basc – Make a baseline correction using the Baseline class.

snip – Perform a Simple Non-Iterative Peak (SNIP) detection algorithm.

rubberband – Perform a Rubberband baseline correction.

autosub – Perform an automatic subtraction of reference.

detrend – Remove polynomial trend along a dimension from dataset.
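The sketch below is a minimal dense NumPy version of the asymmetric least squares scheme of Eilers and Boelens, written to show what lamb, asymmetry, tol and max_iter control. It is not SpectroChemPy's implementation: the function name asls_baseline is hypothetical, and stopping on the relative change of the weights is an assumption about how tol is used.

```python
import numpy as np

def asls_baseline(y, lamb=1e5, asymmetry=0.05, tol=1e-3, max_iter=50):
    """Estimate a baseline by asymmetric least squares (dense sketch)."""
    y = np.asarray(y, dtype=float)
    n = y.size
    # Second-order difference operator; lamb * D.T @ D penalizes curvature.
    D = np.diff(np.eye(n), n=2, axis=0)
    penalty = lamb * (D.T @ D)
    w = np.ones(n)
    z = y.copy()
    for _ in range(max_iter):
        # Weighted, penalized least-squares fit for the current weights.
        z = np.linalg.solve(np.diag(w) + penalty, w * y)
        # Points above the baseline get the small weight `asymmetry`.
        w_new = np.where(y > z, asymmetry, 1.0 - asymmetry)
        if np.linalg.norm(w_new - w) / np.linalg.norm(w) < tol:
            break
        w = w_new
    return z

# Synthetic spectrum: a sloping background plus one Gaussian peak.
x = np.linspace(0.0, 1.0, 100)
spectrum = 0.5 * x + np.exp(-((x - 0.5) ** 2) / (2 * 0.02 ** 2))
baseline = asls_baseline(spectrum)
corrected = spectrum - baseline
```

A dense solve is only practical for short signals; sparse matrices are the usual choice for real spectra.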

- astype(dtype=None, **kwargs)[source]¶

Cast the data to a specified type.

- Parameters

dtype (str or dtype) – Typecode or data-type to which the array is cast.

- atleast_2d(inplace=False)[source]¶

Expand the shape of an array to make it at least 2D.

- Parameters

inplace (bool, optional, default=`False`) – Flag indicating whether the method returns a new object (default) or modifies the current object (inplace=True).

- Returns

NDDataset – The input array, but with dimensions increased.

See also

squeeze – The inverse operation, removing singleton dimensions.

- autosub(dataset, ref, *ranges, dim='x', method='vardiff', return_coefs=False, inplace=False)[source]¶

Automatic subtraction of a reference to the dataset.

The subtraction coefficients are adjusted either to minimize the variance of the subtraction (method = ‘vardiff’), which minimizes peaks due to ref, or to minimize the sum of squares of the subtraction (method = ‘ssdiff’).

- Parameters

dataset (NDDataset) – Dataset from which the reference data is subtracted.

ref (NDDataset) – 1D reference data, with a size matching the dimension along which the subtraction is performed (see the dim parameter).

*ranges (pair(s) of values) – Any number of pairs is allowed. Coord range(s) in which the variance is minimized.

dim (str or int, optional, default=’x’) – Tells on which dimension to perform the subtraction. If dim is an integer it refers to the axis index.

method (str, optional, default=’vardiff’) – ‘vardiff’: minimize the variance of the difference. ‘ssdiff’: minimize the sum of squares of the difference.

return_coefs (bool, optional, default=`False`) – Returns the table of coefficients.

inplace (bool, optional, default=`False`) – True if the subtraction is done in place. In this case we do not need to catch the function output.

- Returns

Examples

>>> path_A = 'irdata/nh4y-activation.spg'
>>> A = scp.read(path_A, protocol='omnic')
>>> ref = A[0, :]  # let's subtract the first row
>>> B = A.autosub(ref, [3900., 3700.], [1600., 1500.], inplace=False)
>>> B
NDDataset: [float64] a.u. (shape: (y:55, x:5549))
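The two criteria have closed-form solutions for a single spectrum, sketched below with NumPy. The helper subtraction_coefficient is hypothetical and works on values already restricted to the chosen ranges; how SpectroChemPy selects the ranges and solves the problem internally is not shown in this entry.

```python
import numpy as np

def subtraction_coefficient(spectrum, ref, method="vardiff"):
    """Coefficient c minimizing the chosen criterion for spectrum - c * ref."""
    spectrum = np.asarray(spectrum, dtype=float)
    ref = np.asarray(ref, dtype=float)
    if method == "vardiff":
        # Minimizing var(spectrum - c * ref) gives c = cov(spectrum, ref) / var(ref).
        s0 = spectrum - spectrum.mean()
        r0 = ref - ref.mean()
        return float(s0 @ r0 / (r0 @ r0))
    if method == "ssdiff":
        # Minimizing sum((spectrum - c * ref) ** 2) gives the least-squares slope.
        return float(spectrum @ ref / (ref @ ref))
    raise ValueError(f"unknown method: {method!r}")

ref = np.array([0.0, 1.0, 4.0, 1.0, 0.0])
spectrum = 3.0 * ref + 5.0  # reference scaled by 3 plus a constant offset
c_var = subtraction_coefficient(spectrum, ref, "vardiff")
c_ss = subtraction_coefficient(3.0 * ref, ref, "ssdiff")
```

The variance criterion ignores a constant offset, which is why it recovers the factor 3 even though 5 was added to the spectrum.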

- average(dataset, dim=None, weights=None, keepdims=False)[source]¶

Compute the weighted average along the specified axis.

- Parameters

dataset (array_like) – Array containing data to be averaged.

dim (None or int or dimension name or tuple of int or dimensions, optional) – Dimension or dimensions along which to operate. By default, flattened input is used. If this is a tuple, the average is computed over multiple dimensions, instead of a single dimension or all the dimensions as before.

weights (array_like, optional) – An array of weights associated with the values in dataset. Each value in dataset contributes to the average according to its associated weight. The weights array can either be 1-D (in which case its length must be the size of dataset along the given axis) or of the same shape as dataset. If weights=None, then all data in dataset are assumed to have a weight equal to one. The 1-D calculation is:

avg = sum(dataset * weights) / sum(weights)

The only constraint on weights is that sum(weights) must not be 0.

- Returns

average – Return the average along the specified axis.

- Raises

ZeroDivisionError – When all weights along axis are zero. See numpy.ma.average for a version robust to this type of error.

TypeError – When the length of 1D weights is not the same as the shape of dataset along axis.

See also

mean – Compute the arithmetic mean along the specified axis.

Examples

>>> nd = scp.read('irdata/nh4y-activation.spg')
>>> nd
NDDataset: [float64] a.u. (shape: (y:55, x:5549))
>>> scp.average(nd)
<Quantity(1.25085858, 'absorbance')>
>>> m = scp.average(nd, dim='y')
>>> m
NDDataset: [float64] a.u. (size: 5549)
>>> m.x
Coord: [float64] cm⁻¹ (size: 5549)
>>> m = scp.average(nd, dim='y', weights=np.arange(55))
>>> m.data
array([ 1.789, 1.789, ..., 1.222, 1.22])
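The 1-D weighting formula given under weights can be checked on a small array. This sketch uses plain NumPy arrays and np.average, on the assumption that the SpectroChemPy function applies the same arithmetic to the underlying data.

```python
import numpy as np

a = np.array([[1.0, 2.0, 3.0],
              [4.0, 5.0, 6.0]])
weights = np.array([1.0, 3.0])  # one weight per row

# Direct use of avg = sum(a * weights) / sum(weights) along the first axis.
manual = (a * weights[:, None]).sum(axis=0) / weights.sum()

# NumPy's own weighted average for comparison.
reference = np.average(a, axis=0, weights=weights)
```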

- bartlett(dataset, **kwargs)[source]¶

Calculate Bartlett apodization (triangular window with end points at zero).

For multidimensional NDDataset, the apodization is by default performed on the last dimension.

The data in the last dimension MUST be time-domain or dimensionless, otherwise an error is raised.

The Bartlett window is defined as

\[w(n) = \frac{2}{M-1} \left( \frac{M-1}{2} - \left| n - \frac{M-1}{2} \right| \right)\]

where M is the number of points of the input dataset.

- Parameters

dataset (Dataset) – Input dataset.

**kwargs – Additional keyword parameters (see Other Parameters).

- Returns

apodized – Dataset.

apod_arr – The apodization array only if ‘retapod’ is True.

- Other Parameters

dim (str or int, keyword parameter, optional, default=’x’) – Specify on which dimension to apply the apodization method. If dim is specified as an integer it is equivalent to the usual axis numpy parameter.

inv (bool, keyword parameter, optional, default=False) – True for inverse apodization.

rev (bool, keyword parameter, optional, default=False) – True to reverse the apodization before applying it to the data.

inplace (bool, keyword parameter, optional, default=False) – True if we make the transform inplace. If False, the function returns a new dataset.

retapod (bool, keyword parameter, optional, default=False) – True to return the apodization array along with the apodized object.

See also

triang – A triangular window that does not touch zero at the ends.
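The window definition can be evaluated directly and compared with NumPy's bartlett function. The helper name bartlett_window is hypothetical, and the sketch only builds the window; it does not apply it to a dataset.

```python
import numpy as np

def bartlett_window(M):
    """Evaluate w(n) = 2/(M-1) * ((M-1)/2 - |n - (M-1)/2|) for n = 0..M-1."""
    n = np.arange(M)
    half = (M - 1) / 2
    return (2.0 / (M - 1)) * (half - np.abs(n - half))

window = bartlett_window(9)
```

The end points are zero and the centre point is one, which is what distinguishes this window from a triangular window that does not touch zero.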

- basc(dataset, *ranges, **kwargs)[source]¶

Compute a baseline corrected dataset using the Baseline class processor.

If no ranges are provided, the feature limits are used. See Baseline for detailed information on the parameters.

- Parameters

- Returns

NDDataset – The computed baseline-corrected dataset.

See also

Baseline – Manual baseline correction processor.

get_baseline – Compute a baseline using the Baseline class.

asls – Perform an Asymmetric Least Squares Smoothing baseline correction.

snip – Perform a Simple Non-Iterative Peak (SNIP) detection algorithm.

rubberband – Perform a Rubberband baseline correction.

autosub – Perform an automatic subtraction of reference.

detrend – Remove polynomial trend along a dimension from dataset.

Notes

For more flexibility and functionality, it is advised to use the Baseline class processor instead.

- blackmanharris(dataset, **kwargs)[source]¶

Calculate a minimum 4-term Blackman-Harris apodization.

For multidimensional NDDataset, the apodization is by default performed on the last dimension.

The data in the last dimension MUST be time-domain or dimensionless, otherwise an error is raised.

- Parameters

dataset (Dataset) – Input dataset.

**kwargs – Additional keyword parameters (see Other Parameters).

- Returns

apodized – Dataset.

apod_arr – The apodization array only if ‘retapod’ is True.

- Other Parameters

dim (str or int, keyword parameter, optional, default=’x’) – Specify on which dimension to apply the apodization method. If dim is specified as an integer it is equivalent to the usual axis numpy parameter.

inv (bool, keyword parameter, optional, default=False) – True for inverse apodization.

rev (bool, keyword parameter, optional, default=False) – True to reverse the apodization before applying it to the data.

inplace (bool, keyword parameter, optional, default=False) – True if we make the transform inplace. If False, the function returns a new dataset.

retapod (bool, keyword parameter, optional, default=False) – True to return the apodization array along with the apodized object.
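For orientation, a minimum 4-term Blackman-Harris window is usually written as a sum of four cosine terms. The sketch below uses the commonly quoted coefficients and a symmetric window; both choices are assumptions, since this entry does not state which coefficients or symmetry SpectroChemPy uses, and the helper name blackman_harris is hypothetical.

```python
import numpy as np

def blackman_harris(M):
    """Symmetric 4-term Blackman-Harris window of length M (sketch)."""
    a0, a1, a2, a3 = 0.35875, 0.48829, 0.14128, 0.01168
    phase = 2.0 * np.pi * np.arange(M) / (M - 1)
    return (a0
            - a1 * np.cos(phase)
            + a2 * np.cos(2 * phase)
            - a3 * np.cos(3 * phase))

window = blackman_harris(65)
```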

- clip(dataset, a_min=None, a_max=None, **kwargs)[source]¶

Clip (limit) the values in a dataset.

Given an interval, values outside the interval are clipped to the interval edges. For example, if an interval of [0, 1] is specified, values smaller than 0 become 0, and values larger than 1 become 1.

No check is performed to ensure a_min < a_max.

- Parameters

dataset (array_like) – Input array or object that can be converted to an array.

a_min (scalar or array_like or None) – Minimum value. If None, clipping is not performed on lower interval edge. Not more than one of a_min and a_max may be None.

a_max (scalar or array_like or None) – Maximum value. If None, clipping is not performed on upper interval edge. Not more than one of a_min and a_max may be None. If a_min or a_max are array_like, then the three arrays will be broadcasted to match their shapes.

- Returns

clip – An array with the elements of dataset, but where values < a_min are replaced with a_min, and those > a_max with a_max.
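A short NumPy sketch of the three cases described above: a closed interval, a single bound, and an array-like bound that is broadcast against the data. It assumes that the SpectroChemPy function treats the underlying values as numpy.clip does.

```python
import numpy as np

values = np.array([-0.5, 0.2, 0.8, 1.7])

# Both bounds: values outside [0, 1] move to the nearest edge.
clipped = np.clip(values, 0.0, 1.0)

# Only an upper bound: the lower edge is left untouched.
upper_only = np.clip(values, None, 1.0)

# An array-like lower bound is applied element by element.
per_element = np.clip(values, np.array([0.0, 0.3, 0.0, 0.0]), 1.0)
```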

- component(select='REAL')[source]¶

Take selected components of a hypercomplex array (RRR, RIR, …).

- Parameters

select (str, optional, default=’REAL’) – If ‘REAL’, only the real component in all dimensions will be selected. Otherwise, a string must specify which real (R) or imaginary (I) component has to be selected along each dimension. For instance, a string such as ‘RRI’ for a 2D hypercomplex array indicates that we take the real component in each dimension except the last one, for which the imaginary component is preferred.

- Returns

component – Component of the complex or hypercomplex array.

- concatenate(*datasets, **kwargs)[source]¶

Concatenation of NDDataset objects along a given axis.

Any number of NDDataset objects can be concatenated (by default along the last dimension). For this operation to be defined, the following must be true:

all inputs must be valid NDDataset objects;

units of data must be compatible;

concatenation is along the axis specified or the last one;

along the non-concatenated dimensions, shapes must match.

- Parameters

*datasets (positional NDDataset arguments) – The dataset(s) to be concatenated to the current dataset. The datasets must have the same shape, except in the dimension corresponding to axis (the last, by default).

**kwargs – Optional keyword parameters (see Other Parameters).

- Returns

out – A NDDataset created from the concatenation of the NDDataset input objects.

- Other Parameters

dims (str, optional, default=’x’) – The dimension along which the operation is applied.

axis (int, optional) – The axis along which the operation is applied.

Examples

>>> A = scp.read('irdata/nh4y-activation.spg', protocol='omnic')
>>> B = scp.read('irdata/nh4y-activation.scp')
>>> C = scp.concatenate(A[10:], B[3:5], A[:10], axis=0)
>>> A[10:].shape, B[3:5].shape, A[:10].shape, C.shape
((45, 5549), (2, 5549), (10, 5549), (57, 5549))

or

>>> D = A.concatenate(B, B, axis=0)
>>> A.shape, B.shape, D.shape
((55, 5549), (55, 5549), (165, 5549))

>>> E = A.concatenate(B, axis=1)
>>> A.shape, B.shape, E.shape
((55, 5549), (55, 5549), (55, 11098))
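The shape rule (shapes must match along every non-concatenated dimension) can be reproduced with plain NumPy arrays, as sketched below. Unit compatibility and coordinate handling, which NDDataset adds on top, are not covered by this sketch.

```python
import numpy as np

A = np.zeros((55, 20))
B = np.ones((55, 20))

# Along axis 0 only the second dimension has to match.
C = np.concatenate((A[10:], B[3:5], A[:10]), axis=0)

# Along axis 1 only the first dimension has to match.
E = np.concatenate((A, B), axis=1)

# A mismatch in a non-concatenated dimension is rejected.
try:
    np.concatenate((A, B[:, :5]), axis=0)
    mismatch_rejected = False
except ValueError:
    mismatch_rejected = True
```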

- conj(dataset, dim='x')[source]¶

Conjugate of the NDDataset in the specified dimension.

- Parameters

dataset (array_like) – Input array or object that can be converted to an array.

dim (int, str, optional, default=(0,)) – Dimension names or indexes along which the method should be applied.

- Returns

conjugated – Same object or a copy depending on the inplace flag.

See also

conj, real, imag, RR, RI, IR, II, part, set_complex, is_complex

- conjugate(dataset, dim='x')[source]¶

Conjugate of the NDDataset in the specified dimension.

- Parameters

dataset (array_like) – Input array or object that can be converted to an array.

dim (int, str, optional, default=(0,)) – Dimension names or indexes along which the method should be applied.

- Returns

conjugated – Same object or a copy depending on the inplace flag.

See also

conj, real, imag, RR, RI, IR, II, part, set_complex, is_complex

- coord(dim='x')[source]¶

Return the coordinates along the given dimension.

- Parameters

dim (int or str) – A dimension index or name, default index = x. If an integer is provided, it is equivalent to the axis parameter for numpy array.

- Returns

Coord – Coordinates along the given axis.

- copy(deep=True, keepname=False, **kwargs)[source]¶

Make a disconnected copy of the current object.

- Parameters

deep (bool, optional) – If True a deepcopy is performed which is the default behavior.

keepname (bool) – If True keep the same name for the copied object.

- Returns

object – An exact copy of the current object.

Examples

>>> nd1 = scp.NDArray([1. + 2.j, 2. + 3.j])
>>> nd1
NDArray: [complex128] unitless (size: 2)
>>> nd2 = nd1
>>> nd2 is nd1
True
>>> nd3 = nd1.copy()
>>> nd3 is not nd1
True

- cs(dataset, pts=0.0, neg=False, **kwargs)[source]¶

Circular shift.

For multidimensional NDDataset, the shift is by default performed on the last dimension.

- Parameters

dataset (NDDataset) – NDDataset to be shifted.

pts (int) – Number of points to shift.

neg (bool) – True to negate the shifted points.

- Returns

dataset – Dataset shifted.

- Other Parameters

dim (str or int, keyword parameter, optional, default=’x’) – Specify on which dimension to apply the shift method. If dim is specified as an integer it is equivalent to the usual axis numpy parameter.

inplace (bool, keyword parameter, optional, default=False) – True if we make the transform inplace. If False, the function returns a new dataset.

See also

roll – Shift without zero filling.
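A circular shift on the last dimension can be sketched with numpy.roll. The helper circular_shift is hypothetical, and the reading of neg (negating the points that wrap around) is an assumption based on the one-line parameter description, not on SpectroChemPy's source.

```python
import numpy as np

def circular_shift(data, pts=0, neg=False):
    """Roll the last axis by pts points; optionally negate wrapped points."""
    shifted = np.roll(np.asarray(data, dtype=float), pts, axis=-1)
    if neg and pts > 0:
        shifted[..., :pts] *= -1   # points that wrapped to the start
    elif neg and pts < 0:
        shifted[..., pts:] *= -1   # points that wrapped to the end
    return shifted

signal = [1.0, 2.0, 3.0, 4.0, 5.0]
forward = circular_shift(signal, 2)
forward_neg = circular_shift(signal, 2, neg=True)
backward_neg = circular_shift(signal, -1, neg=True)
```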

- cumsum(dataset, dim=None, dtype=None)[source]¶

Return the cumulative sum of the elements along a given axis.

- Parameters

dataset (array_like) – Calculate the cumulative sum of these values.

dim (None or int or dimension name , optional) – Dimension or dimensions along which to operate. By default, flattened input is used.

dtype (dtype, optional) – Type to use in computing the cumulative sum. For arrays of integer type the default is float64, for arrays of float types it is the same as the array type.

- Returns

sum – A new array containing the cumulative sum.

See also

Examples

>>> nd = scp.read('irdata/nh4y-activation.spg')
>>> nd
NDDataset: [float64] a.u. (shape: (y:55, x:5549))
>>> scp.sum(nd)
<Quantity(381755.783, 'absorbance')>
>>> scp.sum(nd, keepdims=True)
NDDataset: [float64] a.u. (shape: (y:1, x:1))
>>> m = scp.sum(nd, dim='y')
>>> m
NDDataset: [float64] a.u. (size: 5549)
>>> m.data
array([ 100.7, 100.7, ..., 74, 73.98])
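Since the example above shows sum rather than a running total, the following NumPy sketch illustrates the cumulative behaviour itself, for the flattened default and for a single dimension. It assumes that the SpectroChemPy function mirrors numpy.cumsum on the underlying data.

```python
import numpy as np

data = np.array([[1, 2, 3],
                 [4, 5, 6]])

# Default: the input is flattened before accumulating.
flat = np.cumsum(data)

# Along the first axis: each row adds to the running total of its column.
along_rows = np.cumsum(data, axis=0)
```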

- dc(dataset, **kwargs)[source]¶

Time domain baseline correction.

- Parameters

dataset (NDDataset) – The time domain dataset to be corrected.

kwargs (dict, optional) – Additional parameters.

- Returns

dc – DC corrected array.

- Other Parameters

len (float, optional) – Proportion in percent of the data at the end of the dataset to take into account. By default, 25%.

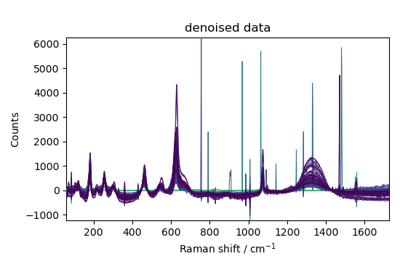
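One common way to perform a time-domain DC correction is to subtract the mean of the tail of the signal, where the decaying signal has died out and only the offset remains. The sketch below follows that idea with a hypothetical helper dc_correct; treating len as the percentage of trailing points to average follows the parameter description, but the exact arithmetic used by SpectroChemPy is an assumption.

```python
import numpy as np

def dc_correct(fid, len_percent=25.0):
    """Subtract the mean of the last len_percent % of points (sketch)."""
    fid = np.asarray(fid)
    n_tail = max(1, int(fid.shape[-1] * len_percent / 100.0))
    offset = fid[..., -n_tail:].mean(axis=-1, keepdims=True)
    return fid - offset

# Decaying oscillation sitting on a constant offset of 0.3.
t = np.linspace(0.0, 1.0, 400)
fid = np.exp(-20.0 * t) * np.cos(2.0 * np.pi * 50.0 * t) + 0.3
corrected = dc_correct(fid)
```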

- denoise(dataset, ratio=99.8, **kwargs)[source]¶

Denoise the data using a PCA method.

Works only on 2D datasets.

- Parameters

dataset (NDDataset or a ndarray-like object) – Input object. Must have two dimensions.

ratio (float, optional, default: 99.8%) – Ratio of variance explained in %. The number of components selected for reconstruction is chosen automatically such that the amount of variance that needs to be explained is greater than the percentage specified by ratio.

**kwargs – Optional keyword parameters (see Other Parameters).

- Returns

NDDataset – Denoised 2D dataset.

- Other Parameters

dim (str or int, optional, default=’x’) – Specify on which dimension to apply this method. If dim is specified as an integer it is equivalent to the usual axis numpy parameter.

log_level (int, optional, default: “WARNING”) – Set the logging level for the method.

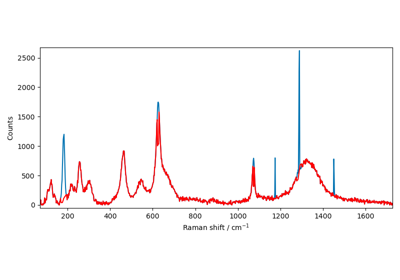
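The role of ratio can be illustrated with a truncated SVD, which is one standard way to carry out a PCA reconstruction. The helper pca_denoise below is a hypothetical NumPy sketch under that assumption; it is not SpectroChemPy's implementation and ignores units, masks and coordinates.

```python
import numpy as np

def pca_denoise(X, ratio=99.8):
    """Rebuild X from the fewest components explaining ratio % of variance."""
    X = np.asarray(X, dtype=float)
    mean = X.mean(axis=0)
    U, s, Vt = np.linalg.svd(X - mean, full_matrices=False)
    explained = 100.0 * np.cumsum(s ** 2) / np.sum(s ** 2)
    # First component count whose cumulative explained variance reaches ratio.
    k = min(int(np.searchsorted(explained, ratio)) + 1, s.size)
    return (U[:, :k] * s[:k]) @ Vt[:k] + mean, k

# Rank-one "spectra" with a small amount of added noise.
rng = np.random.default_rng(0)
clean = np.outer(np.linspace(1.0, 2.0, 30), np.sin(np.linspace(0.0, 3.0, 40)))
noisy = clean + 1e-3 * rng.standard_normal(clean.shape)
denoised, n_components = pca_denoise(noisy)
```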

- despike(dataset, size=9, delta=2)[source]¶

Remove convex spikes from the data using the Katsumoto-Ozaki procedure.

The method can be used to remove cosmic ray peaks from a spectrum.

The present implementation is based on the method described in Katsumoto and Ozaki [2003]:

In the first step, the moving-average method is employed to detect the spike noise. The moving-average window should contain several data points along the abscissa that are larger than those of the spikes in the spectrum. If a window contains a spike, the value on the ordinate for the spike will show an anomalous deviation from the average for this window.

In the second step, each data point value identified as noise is replaced by the moving-averaged value.

In the third step, the moving-average process is applied to the new data set made by the second step.

In the fourth step, the spikes are identified by comparing the differences between the original spectra and the moving-averaged spectra calculated in the third step.

As a result, the proposed method realizes the reduction of convex spikes.

- Parameters

dataset (NDDataset or a ndarray-like object) – Input object.

size (int, optional, default: 9) – Size of the moving average window.

delta (float, optional, default: 2) – Set the threshold for the detection of spikes. A spike is detected if its value is greater than delta times the standard deviation of the difference between the original and the smoothed data.

- Returns

NDDataset – The despiked dataset.
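The four steps quoted above can be sketched for a 1D signal as follows. This is a simplified illustration, not SpectroChemPy's code: the helper names are hypothetical, the window size is assumed to be odd, the edges are padded by repeating the end values, and the threshold rule follows the delta parameter description.

```python
import numpy as np

def moving_average(y, size):
    """Centered moving average with edge padding (size assumed odd)."""
    padded = np.pad(y, size // 2, mode="edge")
    return np.convolve(padded, np.ones(size) / size, mode="valid")

def despike_sketch(y, size=9, delta=2.0):
    y = np.asarray(y, dtype=float)
    # Step 1: detect candidate spikes against a moving average.
    smooth = moving_average(y, size)
    diff = y - smooth
    candidates = diff > delta * diff.std()
    # Step 2: replace the candidates by the moving-averaged value.
    cleaned = np.where(candidates, smooth, y)
    # Step 3: smooth the cleaned data again.
    smooth2 = moving_average(cleaned, size)
    # Step 4: identify spikes by comparing the original with this average.
    diff2 = y - smooth2
    spikes = diff2 > delta * diff2.std()
    return np.where(spikes, smooth2, y)

signal = np.zeros(101)
signal[50] = 10.0  # a single convex spike
result = despike_sketch(signal)
```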

- detrend(dataset, order='linear', breakpoints=[], **kwargs)[source]¶

Remove polynomial trend along a dimension from dataset.

Depending on the order parameter, detrend removes the best-fit polynomial line (in the least squares sense) from the data and returns the remaining data.

- Parameters

dataset (NDDataset) – The input data.

order (non-negative int or a str among [‘constant’, ‘linear’, ‘quadratic’, ‘cubic’], optional, default: ’linear’) – The order of the polynomial trend.

If order=0 or 'constant', the mean of data is subtracted to remove a shift trend.

If order=1 or 'linear' (default), the best straight-fit line is subtracted from data to remove a linear trend (drift).

If order=2 or order='quadratic', the best fitted second-degree polynomial is subtracted from data to remove a quadratic polynomial trend.

order=n can also be used to remove any nth-degree polynomial trend.

breakpoints (array_like, optional) – Breakpoints to define piecewise segments of the data, specified as a vector containing coordinate values or indices indicating the location of the breakpoints. Breakpoints are useful when you want to compute separate trends for different segments of the data.

- Returns

NDDataset– The detrended dataset.

See also

Baseline – Manual baseline correction processor.

get_baseline – Compute a baseline using the Baseline class.

basc – Make a baseline correction using the Baseline class.

asls – Perform an Asymmetric Least Squares Smoothing baseline correction.

snip – Perform a Simple Non-Iterative Peak (SNIP) detection algorithm.

rubberband – Perform a Rubberband baseline correction.

autosub – Perform an automatic subtraction of reference.
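Removing a polynomial trend amounts to a least-squares polynomial fit followed by a subtraction. The NumPy sketch below shows this for a single segment; the helper detrend_poly is hypothetical and the breakpoints option (separate fits per segment) is not covered.

```python
import numpy as np

def detrend_poly(x, y, order=1):
    """Subtract the least-squares polynomial of the given order from y."""
    coeffs = np.polyfit(x, y, order)
    return y - np.polyval(coeffs, x)

x = np.linspace(0.0, 10.0, 50)
drifting = np.sin(x) + 0.5 * x + 2.0

# order=0 removes the mean; order=1 removes the best straight line.
constant_removed = detrend_poly(x, drifting, order=0)
linear_removed = detrend_poly(x, drifting, order=1)
```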

- diag(dataset, offset=0, **kwargs)[source]¶

Extract a diagonal or construct a diagonal array.

See the more detailed documentation for numpy.diagonal if you use this function to extract a diagonal and wish to write to the resulting array; whether it returns a copy or a view depends on what version of numpy you are using.

- Parameters

dataset (array_like) – If dataset is a 2-D array, return a copy of its k-th diagonal, where k is given by offset. If dataset is a 1-D array, return a 2-D array with dataset on the k-th diagonal.

offset (int, optional) – Diagonal in question. The default is 0. Use offset>0 for diagonals above the main diagonal, and offset<0 for diagonals below the main diagonal.

- Returns

diag – The extracted diagonal or constructed diagonal array.
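Both directions (extracting from a 2-D array and building from a 1-D array) are shown below with numpy.diag, whose k argument is assumed to correspond to the offset parameter described above.

```python
import numpy as np

matrix = np.arange(9).reshape(3, 3)

# 2-D input: extract the main diagonal and the first upper diagonal.
main = np.diag(matrix)
upper = np.diag(matrix, k=1)

# 1-D input: build a 2-D array with the values on the first lower diagonal.
built = np.diag(np.array([1, 2]), k=-1)
```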

- diagonal(dataset, offset=0, dim='x', dtype=None, **kwargs)[source]¶

Return the diagonal of a 2D array.

As we reduce a 2D array to a 1D array, we must specify which dimension's coordinates to keep.

- Parameters

dataset (NDDataset or array-like) – Object from which to extract the diagonal.

offset (int, optional) – Offset of the diagonal from the main diagonal. Can be positive or negative. Defaults to main diagonal (0).

dim (str, optional) – Dimension to keep for coordinates. By default it is the last (-1, x or another name if the default dimension name has been modified).

dtype (dtype, optional) – The type of the returned array.

**kwargs – Additional keyword parameters to be passed to the NDDataset constructor.

- Returns

diagonal – The diagonal of the input array.

See also

diag – Extract a diagonal or construct a diagonal array.

Examples

>>> nd = scp.full((2, 2), 0.5, units='s', title='initial')
>>> nd
NDDataset: [float64] s (shape: (y:2, x:2))
>>> nd.diagonal(title='diag')
NDDataset: [float64] s (size: 2)

- dot(a, b, strict=True, out=None)[source]¶

Return the dot product of two NDDatasets.

This function is the equivalent of numpy.dot that takes NDDataset as input.

Note

Works only with 2-D arrays at the moment.

- Parameters

a, b (masked_array_like) – Input arrays.

strict (bool, optional) – Whether masked data are propagated (True) or set to 0 (False) for the computation. Default is True. Propagating the mask means that if a masked value appears in a row or column, the whole row or column is considered masked.

out (masked_array, optional) – Output argument. This must have the exact kind that would be returned if it was not used. In particular, it must have the right type, must be C-contiguous, and its dtype must be the dtype that would be returned for dot(a,b). This is a performance feature. Therefore, if these conditions are not met, an exception is raised, instead of attempting to be flexible.

See also

numpy.dot – Equivalent function for ndarrays.

numpy.ma.dot – Equivalent function for masked ndarrays.
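The effect of strict on masked values can be seen with numpy.ma.dot, the masked-array function listed above. The sketch uses plain masked arrays; it assumes the NDDataset version handles its masks in the same way.

```python
import numpy as np

a = np.ma.array([[1, 2, 3], [4, 5, 6]],
                mask=[[1, 0, 0], [0, 0, 0]])
b = np.ma.array([[1, 2], [3, 4], [5, 6]],
                mask=[[1, 0], [0, 0], [0, 0]])

# strict=False: masked entries count as 0 in the products.
loose = np.ma.dot(a, b, strict=False)

# strict=True: a masked entry masks every result that depends on its
# row (in a) or column (in b).
strict = np.ma.dot(a, b, strict=True)
```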

- download_nist_ir(CAS, index='all')[source]¶

Download IR spectra from the NIST WebBook.

- Parameters

CAS (int or str) – The CAS number, which can be given as “XXXX-XX-X” (str), “XXXXXXX” (str), or XXXXXXX (int).

index (str or int or tuple of ints) – If set to ‘all’ (default), import all available spectra for the compound. Otherwise, import the single spectrum or the selected spectra corresponding to the given index or indices.

- Returns

list of NDDataset or NDDataset – The dataset(s).

See also

read – Read data from experimental data.

- dump(filename, **kwargs)[source]¶

Save the current object into compressed native spectrochempy format.

- Parameters

filename (str or pathlib object) – File name where to save the current object.

- em(dataset, lb=1, shifted=0, **kwargs)[source]¶

Calculate exponential apodization.

For multidimensional NDDataset, the apodization is by default performed on the last dimension.

The data in the last dimension MUST be time-domain, or an error is raised.

Functional form of apodization window :

\[em(t) = \exp(- e (t-t_0) )\]

where

\[e = \pi * lb\]

- Parameters

dataset (Dataset) – Input dataset.

lb (float or Quantity, optional, default=1 Hz) – Exponential line broadening. If it is not a quantity with units, it is assumed to be a broadening expressed in Hz.

shifted (float or Quantity, optional, default=0 us) – Shift the data time origin by this amount. If it is not a quantity it is assumed to be expressed in the data units of the last dimension.

- Returns

apodized – Dataset.

apod_arr – The apodization array only if ‘retapod’ is True.

- Other Parameters

dim (str or int, keyword parameter, optional, default=’x’) – Specify on which dimension to apply the apodization method. If dim is specified as an integer it is equivalent to the usual axis numpy parameter.

inv (bool, keyword parameter, optional, default=False) – True for inverse apodization.

rev (bool, keyword parameter, optional, default=False) – True to reverse the apodization before applying it to the data.

inplace (bool, keyword parameter, optional, default=False) – True if we make the transform inplace. If False, the function returns a new dataset.

retapod (bool, keyword parameter, optional, default=False) – True to return the apodization array along with the apodized object.
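The functional form given above can be evaluated directly. In this sketch the helper em_window is hypothetical, t is a plain array of times in seconds and lb is in Hz; unit handling, which the Quantity-aware function performs, is left out.

```python
import numpy as np

def em_window(t, lb=1.0, t0=0.0):
    """Evaluate em(t) = exp(-pi * lb * (t - t0))."""
    return np.exp(-np.pi * lb * (np.asarray(t, dtype=float) - t0))

t = np.linspace(0.0, 1.0, 5)
window = em_window(t, lb=2.0)
```

Larger lb values make the window decay faster, which broadens the lines after Fourier transformation.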

- empty(shape, dtype=None, **kwargs)[source]¶

Return a new NDDataset of given shape and type, without initializing entries.

- Parameters

shape (int or tuple of int) – Shape of the empty array.

dtype (data-type, optional) – Desired output data-type.

**kwargs – Optional keyword parameters (see Other Parameters).

- Returns

empty – Array of uninitialized (arbitrary) data of the given shape, dtype, and order. Object arrays will be initialized to None.

- Other Parameters

units (str or ur instance) – Units of the returned object. If not provided, try to copy from the input object.

coordset (list or Coordset object) – Coordinates for the returned object. If not provided, try to copy from the input object.

See also

zeros_like – Return an array of zeros with shape and type of input.

ones_like – Return an array of ones with shape and type of input.

empty_like – Return an empty array with shape and type of input.

full_like – Fill an array with shape and type of input.

zeros – Return a new array setting values to zero.

ones – Return a new array setting values to 1.

full – Fill a new array.

Notes

empty, unlike zeros, does not set the array values to zero, and may therefore be marginally faster. On the other hand, it requires the user to manually set all the values in the array, and should be used with caution.

Examples

>>> scp.empty([2, 2], dtype=int, units='s')
NDDataset: [int64] s (shape: (y:2, x:2))

- empty_like(dataset, dtype=None, **kwargs)[source]¶

Return a new uninitialized NDDataset.

The returned NDDataset has the same shape and type as a given array. Units, coordset, … can be added in kwargs.

- Parameters

dataset (NDDataset or array-like) – Object from which to copy the array structure.

dtype (data-type, optional) – Overrides the data type of the result.

**kwargs – Optional keyword parameters (see Other Parameters).

- Returns

emptylike – Array of uninitialized (arbitrary) data with the same shape and type as dataset.

- Other Parameters

units (str or ur instance) – Units of the returned object. If not provided, try to copy from the input object.

coordset (list or Coordset object) – Coordinates for the returned object. If not provided, try to copy from the input object.

Notes

This function does not initialize the returned array; to do that, use for instance zeros_like, ones_like or full_like instead. It may be marginally faster than the functions that do set the array values.

- eye(N, M=None, k=0, dtype=float, **kwargs)[source]¶

Return a 2-D array with ones on the diagonal and zeros elsewhere.

- Parameters

N (int) – Number of rows in the output.

M (int, optional) – Number of columns in the output. If None, defaults to N.

k (int, optional) – Index of the diagonal: 0 (the default) refers to the main diagonal, a positive value refers to an upper diagonal, and a negative value to a lower diagonal.

dtype (data-type, optional) – Data-type of the returned array.

**kwargs – Other parameters to be passed to the object constructor (units, coordset, mask …).

- Returns

eye – NDDataset of shape (N, M). An array where all elements are equal to zero, except for the k-th diagonal, whose values are equal to one.

Examples

>>> scp.eye(2, dtype=int)
NDDataset: [float64] unitless (shape: (y:2, x:2))
>>> scp.eye(3, k=1, units='km').values
<Quantity([[ 0 1 0]
 [ 0 0 1]
 [ 0 0 0]], 'kilometer')>
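The effect of k can be illustrated without SpectroChemPy. The following is a minimal pure-Python sketch, not the library's implementation: the helper name eye_rows is hypothetical, and it assumes that element (i, j) lies on the k-th diagonal when j - i == k, which matches the k=1 output shown in the example above.

```python
def eye_rows(n, m=None, k=0):
    """Return an n x m nested list with ones on the k-th diagonal.

    Hypothetical helper for illustration only; not part of SpectroChemPy.
    """
    if m is None:
        m = n  # square by default, like the M parameter described above
    # Element (i, j) lies on the k-th diagonal when j - i == k.
    return [[1 if j - i == k else 0 for j in range(m)] for i in range(n)]


main = eye_rows(2)          # main diagonal
upper = eye_rows(3, k=1)    # first upper diagonal
lower = eye_rows(3, k=-1)   # first lower diagonal
```

A positive k shifts the ones to the right of the main diagonal, a negative k shifts them below it.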

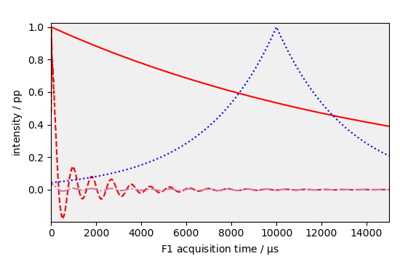

- fft(dataset, size=None, sizeff=None, inv=False, ppm=True, **kwargs)[source]¶

Apply a complex fast Fourier transform.

For multidimensional NDDataset, the transform is by default performed on the last dimension.

The data in the last dimension MUST be in time-domain (or without dimension) or an error is raised.

To perform the inverse Fourier transform, i.e., from frequency to time domain, use the ifft transform (or, equivalently, the inv=True parameter).

- Parameters

dataset (NDDataset) – The dataset on which to apply the fft transformation.

size (int, optional) – Size of the transformed dataset dimension (si is a shorter name for this parameter). By default, the size is the closest power of two greater than the data size.

sizeff (int, optional) – The number of effective data points to take into account for the transformation. By default it is equal to the data size, but may be smaller.

inv (bool, optional, default=False) – If True, an inverse Fourier transform is performed - size parameter is not taken into account.

ppm (bool, optional, default=True) – If True, and data are from NMR, then a ppm scale is calculated instead of frequency.

**kwargs – Optional keyword parameters (see Other Parameters).

- Returns

out – Transformed NDDataset.

- Other Parameters

dim (str or int, optional, default=’x’) – Specify on which dimension to apply this method. If dim is specified as an integer, it is equivalent to the usual axis numpy parameter.

inplace (bool, optional, default=False) – If True, the transform is made in place. If False, the function returns a new object.

tdeff (int, optional) – Alias of sizeff (specific to NMR). If both sizeff and tdeff are passed, sizeff has the priority.

See also

ifft – Inverse Fourier transform.
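The default for size is described as the closest power of two greater than the data size. A minimal sketch of that rule follows. The helper name next_power_of_two is hypothetical, and the text does not say whether a data size that is already a power of two is kept or doubled; the sketch assumes it is kept.

```python
def next_power_of_two(n):
    """Smallest power of two that is >= n.

    Hypothetical helper; the ">=" reading (rather than strict ">") is an
    assumption, since the description of `size` leaves it open.
    """
    if n < 1:
        raise ValueError("n must be a positive integer")
    # (n - 1).bit_length() is the number of bits needed to write n - 1,
    # so shifting 1 by that amount gives the smallest power of two >= n.
    return 1 << (n - 1).bit_length()


sizes = [next_power_of_two(n) for n in (1, 1000, 1024, 1025)]
```

Under this assumption, a 1000-point signal would be transformed with 1024 points, the extra points presumably being zero-filled.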

- find_peaks(dataset, height=None, window_length=3, threshold=None, distance=None, prominence=None, width=None, wlen=None, rel_height=0.5, plateau_size=None, use_coord=True)[source]¶

Wrapper and extension of scipy.signal.find_peaks.

Find peaks inside a 1D NDDataset based on peak properties. This function finds all local maxima by simple comparison of neighbouring values. Optionally, a subset of these peaks can be selected by specifying conditions for a peak’s properties.

Warning

This function may return unexpected results for data containing NaNs. To avoid this, NaNs should either be removed or replaced.

- Parameters

dataset (NDDataset) – A 1D NDDataset or a 2D NDDataset with len(X.y) == 1.

height (float or array-like, optional, default: None) – Required height of peaks. Either a number, None, an array matching x or a 2-element sequence of the former. The first element is always interpreted as the minimal and the second, if supplied, as the maximal required height.

window_length (int, default: 3) – The length of the filter window used to interpolate the maximum. window_length must be a positive odd integer. If set to one, the actual maximum is returned.

threshold (float or array-like, optional) – Required threshold of peaks, the vertical distance to its neighbouring samples. Either a number, None, an array matching x or a 2-element sequence of the former. The first element is always interpreted as the minimal and the second, if supplied, as the maximal required threshold.

distance (float, optional) – Required minimal horizontal distance in samples between neighbouring peaks. Smaller peaks are removed first until the condition is fulfilled for all remaining peaks.

prominence (float or array-like, optional) – Required prominence of peaks. Either a number, None, an array matching x or a 2-element sequence of the former. The first element is always interpreted as the minimal and the second, if supplied, as the maximal required prominence.

width (float or array-like, optional) – Required width of peaks in samples. Either a number, None, an array matching x or a 2-element sequence of the former. The first element is always interpreted as the minimal and the second, if supplied, as the maximal required width. Floats are interpreted as width measured along the ‘x’ Coord; ints are interpreted as a number of points.

wlen (int or float, optional) – Used for calculation of the peaks’ prominences, thus it is only used if one of the arguments prominence or width is given. Floats are interpreted as measured along the ‘x’ Coord; ints are interpreted as a number of points. See argument wlen in peak_prominences of the scipy documentation for a full description of its effects.

rel_height (float, optional) – Used for calculation of the peaks’ width, thus it is only used if width is given. See argument rel_height in peak_widths of the scipy documentation for a full description of its effects.

plateau_size (float or array-like, optional) – Required size of the flat top of peaks in samples. Either a number, None, an array matching x or a 2-element sequence of the former. The first element is always interpreted as the minimal and the second, if supplied, as the maximal required plateau size. Floats are interpreted as measured along the ‘x’ Coord; ints are interpreted as a number of points.

use_coord (bool, optional) – Set whether the x Coord (when it exists) should be used instead of indices for the positions and width. If True, the units of the other parameters are interpreted according to the coordinates.

- Returns

peaks (ndarray) – Indices of peaks in dataset that satisfy all given conditions.

properties (dict) – A dictionary containing properties of the returned peaks which were calculated as intermediate results during evaluation of the specified conditions:

- peak_heights

If height is given, the height of each peak in dataset.

- left_thresholds, right_thresholds

If threshold is given, these keys contain a peak’s vertical distance to its neighbouring samples.

- prominences, right_bases, left_bases

If prominence is given, these keys are accessible. See scipy.signal.peak_prominences for a full description of their content.

- widths, width_heights, left_ips, right_ips

If width is given, these keys are accessible. See scipy.signal.peak_widths for a full description of their content.

- plateau_sizes, left_edges, right_edges

If plateau_size is given, these keys are accessible and contain the indices of a peak’s edges (edges are still part of the plateau) and the calculated plateau sizes.

To calculate and return properties without excluding peaks, provide the open interval (None, None) as a value to the appropriate argument (excluding distance).

- Warns

PeakPropertyWarning – Raised if a peak’s properties have unexpected values (see peak_prominences and peak_widths).

See also

find_peaks_cwt – In scipy.signal: Find peaks using the wavelet transformation.

peak_prominences – In scipy.signal: Directly calculate the prominence of peaks.

peak_widths – In scipy.signal: Directly calculate the width of peaks.

Notes

In the context of this function, a peak or local maximum is defined as any sample whose two direct neighbours have a smaller amplitude. For flat peaks (more than one sample of equal amplitude wide) the index of the middle sample is returned (rounded down in case the number of samples is even). For noisy signals the peak locations can be off because the noise might change the position of local maxima. In those cases consider smoothing the signal before searching for peaks or use other peak finding and fitting methods (like scipy.signal.find_peaks_cwt).

Some additional comments on specifying conditions:

Almost all conditions (excluding distance) can be given as half-open or closed intervals, e.g., 1 or (1, None) defines the half-open interval \([1, \infty)\) while (None, 1) defines the interval \((-\infty, 1]\). The open interval (None, None) can be specified as well, which returns the matching properties without exclusion of peaks.

The border is always included in the interval used to select valid peaks.

For several conditions the interval borders can be specified with arrays matching dataset in shape, which enables dynamic constraints based on the sample position.

The conditions are evaluated in the following order: plateau_size, height, threshold, distance, prominence, width. In most cases this order is the fastest one because faster operations are applied first to reduce the number of peaks that need to be evaluated later.

While indices in peaks are guaranteed to be at least distance samples apart, edges of flat peaks may be closer than the allowed distance.

Use wlen to reduce the time it takes to evaluate the conditions for prominence or width if dataset is large or has many local maxima (see scipy.signal.peak_prominences).
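The peak definition and the interval conditions described in these notes can be sketched in plain Python. This is an illustration under stated assumptions, not the code used by SpectroChemPy or SciPy: the names local_maxima and within are hypothetical, and only scalar conditions are handled (not arrays matching the dataset).

```python
def local_maxima(values):
    """Indices of samples strictly higher than both direct neighbours.

    A flat top counts as a single peak, reported at its middle sample
    (rounded down when the plateau has an even number of samples).
    Hypothetical helper for illustration only.
    """
    peaks = []
    i = 1
    last = len(values) - 1
    while i < last:
        if values[i - 1] < values[i]:
            # Walk to the first sample after a possible plateau starting at i.
            ahead = i + 1
            while ahead < last and values[ahead] == values[i]:
                ahead += 1
            if values[ahead] < values[i]:
                left, right = i, ahead - 1
                peaks.append((left + right) // 2)
                i = ahead  # continue after the plateau
        i += 1
    return peaks


def within(value, condition):
    """Test value against a number or a (minimum, maximum) pair.

    None means "no bound on this side"; borders are included.
    """
    if condition is None or isinstance(condition, (int, float)):
        low, high = condition, None
    else:
        low, high = condition
    return (low is None or value >= low) and (high is None or value <= high)


signal = [0, 1, 0, 2, 2, 0, 3, 3, 3, 1, 4]
peaks = local_maxima(signal)
tall = [p for p in peaks if within(signal[p], (2, None))]
capped = [p for p in peaks if within(signal[p], (None, 2))]
unfiltered = [p for p in peaks if within(signal[p], (None, None))]
```

Note that the final sample (value 4) is not reported, because it has only one neighbour, and that (None, None) keeps every peak.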

Examples

>>> dataset = scp.read("irdata/nh4y-activation.spg")
>>> X = dataset[0, 1800.0:1300.0]
>>> peaks, properties = X.find_peaks(height=1.5, distance=50.0, width=0.0)
>>> len(peaks.x)
2
>>> peaks.x.values
<Quantity([ 1644 1455], 'centimeter^-1')>
>>> properties["peak_heights"][0]
<Quantity(2.26663446, 'absorbance')>
>>> properties["widths"][0]
<Quantity(38.729003, 'centimeter^-1')>

- fromfunction(cls, function, shape=None, dtype=float, units=None, coordset=None, **kwargs)[source]¶

Construct an NDDataset by executing a function over each coordinate.

The resulting array therefore has a value fn(x, y, z) at coordinate (x, y, z).

- Parameters

function (callable) – The function is called with N parameters, where N is the rank of shape or is taken from the provided CoordSet.

shape ((N,) tuple of ints, optional) – Shape of the output array, which also determines the shape of the coordinate arrays passed to function. It may be omitted only if coordset is provided.

dtype (data-type, optional) – Data-type of the coordinate arrays passed to function. By default, dtype is float.

units (str, optional) – Dataset units. When None, units will be determined from the function results.

coordset (CoordSet instance, optional) – If provided, this determines the shape and coordinates of each dimension of the returned NDDataset. If shape is also passed, it will be ignored.

**kwargs – Other kwargs are passed to the final object constructor.
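The idea of evaluating a function at each coordinate can be sketched in plain Python for the 2-D case. This is a conceptual illustration only: the helper grid_from_function is hypothetical, it loops element by element (the library may well pass whole coordinate arrays to the function instead), and it assumes that integer indices serve as coordinates when only a shape is given.

```python
def grid_from_function(function, y_coords, x_coords):
    """Return rows of function(y, x) for every pair of coordinate values.

    Hypothetical helper for illustration only; not part of SpectroChemPy.
    """
    return [[function(y, x) for x in x_coords] for y in y_coords]


# Shape-only case: coordinates are assumed to be the integer indices.
by_index = grid_from_function(lambda y, x: y + x, range(2), range(3))

# Coordinate case: the coordinate values themselves are passed to the function.
by_coord = grid_from_function(lambda y, x: y * x, [10.0, 20.0], [0.5, 1.0, 2.0])
```

In both cases the shape of the result follows from the number of coordinate values along each dimension, which is why shape becomes redundant once coordinates are supplied.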

- Returns

fromfunction – The result of the call to